Ingesting the documentation into ChatGPT or similar: an interesting opportunity?

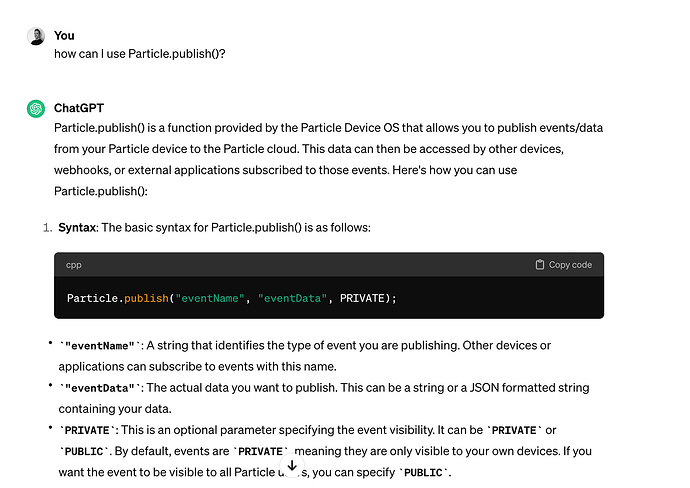

Hey, I asked ChatGPT about Particle.publish() and it gave me a decent answer:

I also asked about a timer and its answer was OK:

How is your experience?

Best

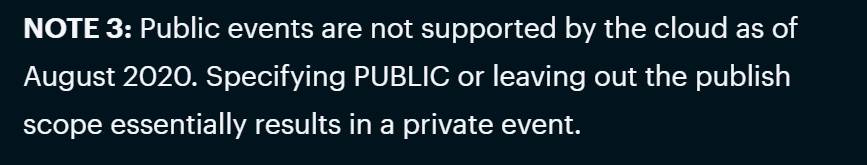

That said, its assessment of what 'PUBLIC' publishes mean isn't quite correct (anymore).

So these LLMs can give you a decent hint, but for exact answers you should consult the original sources; fortunately, they often provide some useful links too.

Recently I had a fruitless discussion with one of these about a problem I just couldn't solve myself. Even after I explained the issue at hand and what I had already tried, the LLM kept dishing out all kinds of "solutions" I had already tried and dismissed, and sometimes even came up with "solutions" that didn't do any of what I had asked for.

When I explained why they wouldn't work, it just kept apologizing for the "misunderstanding" and then dished out a "corrected" version that was exactly the same as the one I had just dismantled.

For (near-)boilerplate solutions, and for getting a fresh point of view when I'm stuck in a mental rut, I do consult an LLM from time to time. But they haven't made us redundant yet.

Thanks @gusgonnet @ScruffR

@gusgonnet My interest was in an LLM trained specifically on the Particle documentation, for the reasons @ScruffR explained (each ChatGPT model has a cut-off date for the publicly available internet content it was trained on, which depends on the model used, so it knows nothing about the latest Device OS release).

ChatParticle? I assume a lightweight foundation model could be used, either trained on or given access to a vector database of all the Particle documentation, references and libraries.
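The retrieval half of that idea can be sketched in a few lines. This is a toy illustration, not how any particular product works: it assumes the documentation has already been split into short text chunks (the three below are hypothetical paraphrases, not quotes from the docs), and it uses plain word counts with cosine similarity where a real vector database would store embeddings produced by a model. The best-matching chunk would then be handed to the LLM as context for its answer.

```python
import math
import re
from collections import Counter

# Hypothetical doc chunks; a real index would hold thousands, embedded by a model.
CHUNKS = [
    "Particle.publish() sends an event from the device to the Particle Cloud.",
    "Software timers call a function repeatedly at a fixed interval.",
    "Particle.subscribe() registers a handler that runs when a matching event arrives.",
]

def vectorize(text: str) -> Counter:
    """Bag-of-words term counts; a stand-in for a real embedding vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = vectorize(query)
    return sorted(chunks, key=lambda c: cosine(q, vectorize(c)), reverse=True)[:k]

# The retrieved chunk(s) would be pasted into the LLM prompt as context.
print(retrieve("how do I publish an event", CHUNKS))
```

Swapping `vectorize` for a real embedding model and the list for a vector store is what turns this toy into the setup described above.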

Sorry, @ScruffR, that you didn't get an AlphaGo move!

Hey! I asked ChatGPT about it, and according to ChatGPT, ingesting documentation into ChatGPT or similar AI models presents a fascinating opportunity with several potential benefits. By feeding documentation into ChatGPT, we could:

- Facilitate Instant Access to Information: Users could quickly access relevant information by querying the AI model, saving time and effort typically spent searching through lengthy documentation.

- Enhance User Experience: Integrating documentation into ChatGPT would streamline the user experience, allowing for natural language queries and responses, which can be more intuitive and user-friendly.

- Improve Learning and Understanding: ChatGPT could assist users in comprehending complex topics by providing explanations and examples in a conversational manner, aiding in learning and knowledge retention.

- Support Problem Solving: Users could seek guidance from ChatGPT on troubleshooting issues or resolving queries, benefiting from the AI model's ability to analyze and provide solutions based on the ingested documentation.

- Enable Automation and Assistance: ChatGPT could automate certain tasks by referencing the ingested documentation, such as generating code snippets, writing responses to common inquiries, or providing step-by-step instructions.

Overall, ingesting documentation into ChatGPT presents an exciting opportunity to leverage AI for knowledge management, support, and problem-solving, ultimately enhancing user productivity and satisfaction.

@Brielleariaa12 Welcome to the community - and great first post!

I just had some fun arguing with MS Copilot about a mathematical question.

For the question "For which x does (sqrt(x) + sqrt(-x) = 8) hold true?"

I got

- there is no solution. The sum of a square root of a real and a complex number cannot make a real number.

After I hinted that x might not be a real number, it apologized and gave me

- 8 = 0

After I pointed out that this cannot be true, it apologized and gave me

- x = 16i

When I stated that this would actually give 5.65685... instead of 8, it apologized but insisted that it would actually give 0.

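The correct solution would have been x = 32i, because (4 + 4i)² = 32i and (4 - 4i)² = -32i, so the two square roots add up to 8 (by symmetry, x = -32i works too).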

So much for the reliability of LLMs: never trust, always double check!
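In the spirit of "always double check": evaluating the left-hand side numerically settles the argument. This minimal Python sketch assumes principal square roots, which is what `cmath.sqrt` computes; under that convention it reproduces the values from the exchange above, about 5.65685 for x = 16i and 8 for x = 32i.

```python
import cmath

def lhs(x: complex) -> complex:
    """Left-hand side sqrt(x) + sqrt(-x), using principal square roots."""
    return cmath.sqrt(x) + cmath.sqrt(-x)

# Copilot's 16i, the correct 32i, and -32i (the equation is symmetric in x and -x).
for x in (16j, 32j, -32j):
    value = lhs(x)
    print(f"x = {x}: sqrt(x) + sqrt(-x) = {value:.5f}, equals 8: {cmath.isclose(value, 8)}")
```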

Ditto MS Copilot in Windows 11.

Less scientific than your question: I asked it for the number of working days per month over the next year for a proposal estimate. The total was wrong the first time; I asked again and it was still wrong, but closer. When I asked it to take public holidays into account, it gave me yet another (slightly different) answer, with the caveat that public holidays had not been accounted for!
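Counting working days is deterministic arithmetic that a few lines of code get right every time. A minimal Python sketch, assuming a Monday-to-Friday working week; the year (2024) and the holiday list are hypothetical placeholders to be replaced with the period and the regional public holidays you actually need.

```python
import calendar
from datetime import date

def working_days(year: int, month: int, holidays: frozenset[date] = frozenset()) -> int:
    """Count Monday-Friday dates in the given month that are not listed holidays."""
    _, days_in_month = calendar.monthrange(year, month)
    count = 0
    for day in range(1, days_in_month + 1):
        d = date(year, month, day)
        # date.weekday(): Monday == 0 ... Sunday == 6, so < 5 means Mon-Fri
        if d.weekday() < 5 and d not in holidays:
            count += 1
    return count

# Hypothetical holidays for illustration only; use your region's actual list.
HOLIDAYS = frozenset({date(2024, 1, 1), date(2024, 12, 25), date(2024, 12, 26)})

total = 0
for month in range(1, 13):
    days = working_days(2024, month, HOLIDAYS)
    total += days
    print(f"{calendar.month_abbr[month]} 2024: {days}")
print(f"Total: {total}")
```

Because holidays are just a set of dates, the per-month figures and the total stay consistent with each other, which is exactly what the LLM answers failed to do.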

I would personally love to see this as an extension to Workbench, right within VS Code. I use the GitHub Copilot extension today, and it's a better user experience than copying and pasting code back and forth to ChatGPT. I can also just highlight lines of code and ask it to explain them to me.

I haven't used GitHub Copilot for Particle-specific questions, but I suspect it would do a decent job. I'll try it out later and report back.

I have been doing some research: there is a free chat interface called LMstudio.ai that lets you install a selected model on your own machine (apparently it works better on machines with a GPU, e.g. Apple M1/M2/M3 devices). You then install AnythingLLM and ingest the document(s) you want to chat with. I haven't tried it yet, but I might have some free time soon. It would be great to have the latest Particle documentation available like this, and it might take some load off Rick?
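Document-chat tools of this kind typically split each ingested document into smaller chunks before embedding them, so that only the relevant pieces are passed to the model. The sketch below shows the general idea with fixed-size, overlapping word windows; the function name and the default sizes are assumptions for illustration, not AnythingLLM's actual settings or splitter.

```python
def chunk_words(text: str, size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of `size` words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("need 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):  # this window already reached the end
            break
    return chunks

sample = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_words(sample, size=4, overlap=1):
    print(chunk)  # prints: w0 w1 w2 w3 / w3 w4 w5 w6 / w6 w7 w8 w9
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from at least one chunk, at the cost of storing a little text twice.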

I would be interested to hear how you get on with Github Copilot.

Further research: for those based in the US, you could try Google's NotebookLM if you want a research assistant for the Particle documentation.