Hi all,

My use case: I have a small fleet of Photons and a web app that polls the Particle Cloud API so that the user can see real-time weight data from a scale (the app polls about once per second).

When the announcement came out last month, I read the sentence saying that Particle Cloud API calls do not count against your Data Operations limit and breathed a sigh of relief. However, when I just checked my usage/billing dashboard, I saw that I've consumed 230k data operations in the first 1.5 weeks of my monthly cycle (with more customers coming on board). I then re-read the documentation and saw that every variable read counts as a DO, and my API calls return a variable (I assume this is what's incrementing my DOs, right?). Now I'm feeling sick to my stomach.
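For a sense of scale, here is a rough back-of-the-envelope sketch in C++. It assumes, as read in the documentation above, that each cloud variable read counts as exactly one data operation and that each poll reads a single variable. The function names are hypothetical helpers, not part of any Particle API, and the 30-day cycle length is an assumption.

```cpp
// Rough estimate of Particle data operations (DOs) consumed by client-side
// polling. ASSUMPTION: one variable read == one DO, one variable per poll.
// All names are hypothetical, for illustration only.

// DOs consumed per hour by one client polling at `pollsPerSecond`.
constexpr double dosPerHour(double pollsPerSecond) {
    return pollsPerSecond * 3600.0;
}

// Total hours of continuous polling (summed across all open clients)
// that a given DO count corresponds to.
constexpr double pollingHours(double dataOps, double pollsPerSecond) {
    return dataOps / dosPerHour(pollsPerSecond);
}

// Linear projection of DOs over a full billing cycle, assuming usage
// continues at the same average daily rate.
constexpr double projectedCycleTotal(double dataOpsSoFar, double daysElapsed,
                                     double cycleDays) {
    return dataOpsSoFar / daysElapsed * cycleDays;
}
```

Plugging in the numbers from this post, `pollingHours(230000, 1.0)` is about 63.9 hours of one-per-second polling over roughly 10.5 days, or about 6 hours per day summed across all open clients, and `projectedCycleTotal(230000, 10.5, 30)` projects about 657,000 DOs for a 30-day cycle if usage stays flat. Each additional client with the app open adds 3,600 DOs per hour at this polling rate.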

Is my best option instead to send HTTP POST requests directly from the Particle device itself to a third-party database at a fixed interval (and have the app poll my database), without using Particle.publish(), webhooks, or any other integration? If I send the variable in the POST body, does that still count as a DO? Does anyone have better ideas?

If it's possible to circumvent the data operations limit this way, I'll give it a try, but it seems a real shame to no longer take advantage of Particle's lovely REST API or publish integrations and instead do it clumsily in the firmware. Thank you.

I'm not sure, but my understanding is that any data transfer other than Particle.publish(), Particle.subscribe(), and reading variables does not count toward data operations. For this reason, I'd expect anyone using a WiFi-equipped device with high data throughput to avoid routing the main data stream through the Particle Cloud, similar to what you described.

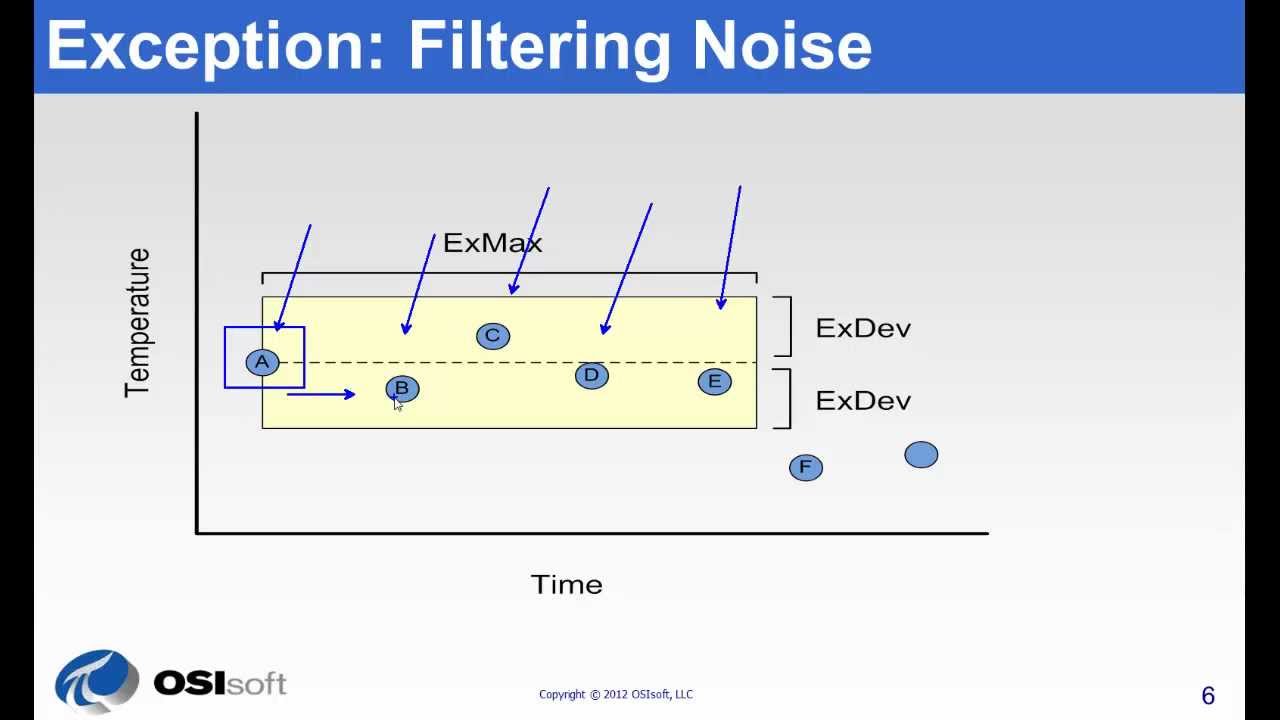

Something else you could consider is applying the concepts of compression and exception handling to the data within the firmware. This terminology is common in time-series data archivers. Basically, it means only sending the data IF it changed since the last time it was sampled. Here's a video explaining the concept.

In short: only send data IF it changed; otherwise, assume the value is the same as the prior data point. Just a concept; I'm not sure whether it would help your scenario.
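A minimal sketch of the exception part of this idea in portable C++, assuming the firmware samples the scale periodically and asks a filter whether each reading is worth sending before it publishes or POSTs. `ExceptionFilter`, `shouldSend`, `deadband`, and `maxIntervalMs` are hypothetical names, not from any library, and compression proper is not shown. Besides the deadband check, it forces a send after a maximum interval, a setting that historians commonly pair with exception reporting, so the receiver still gets a periodic heartbeat when the weight is unchanged.

```cpp
#include <cmath>
#include <cstdint>

// Hypothetical report-by-exception filter for a scale reading.
// A reading is sent when:
//   * it is the first reading,
//   * it differs from the last SENT value by more than `deadband`, or
//   * at least `maxIntervalMs` has passed since the last send (heartbeat).
class ExceptionFilter {
public:
    ExceptionFilter(double deadband, uint32_t maxIntervalMs)
        : deadband_(deadband), maxIntervalMs_(maxIntervalMs) {}

    // Returns true if `value`, sampled at time `nowMs`, should be sent.
    // Internal state is updated only when it returns true.
    bool shouldSend(double value, uint32_t nowMs) {
        if (hasSent_) {
            // Unsigned 32-bit subtraction stays correct across a timer wrap.
            const uint32_t elapsed = nowMs - lastSentMs_;
            const bool changed = std::fabs(value - lastSent_) > deadband_;
            const bool heartbeatDue = elapsed >= maxIntervalMs_;
            if (!changed && !heartbeatDue) {
                return false;  // Receiver may assume the prior value still holds.
            }
        }
        hasSent_ = true;
        lastSent_ = value;
        lastSentMs_ = nowMs;
        return true;
    }

private:
    double deadband_;         // Minimum change worth reporting (scale units).
    uint32_t maxIntervalMs_;  // Longest allowed gap between sends.
    bool hasSent_ = false;
    double lastSent_ = 0.0;
    uint32_t lastSentMs_ = 0;
};
```

On a Photon, `nowMs` could come from `millis()`; because the elapsed time is computed with unsigned 32-bit subtraction, it remains correct when the millisecond counter wraps around. With a heartbeat in place, the receiving side could treat any value older than `maxIntervalMs` plus some grace period as stale rather than merely unchanged.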


@jgskarda Thanks, Jeff. I have explored this concept before, and the problem I keep running into is this: if I only publish when the data changes and the user sees a weight in the front end (with an older DB timestamp), I have no way to tell whether that is the true current weight from the serial device (its DB value simply isn't being updated because it's unchanged) or whether the Particle Cloud API or something else has broken.

In other words, without a 100% guarantee that every single publish event reaches my database, I can't see how to make this work 100% of the time for my users without polling from the client side (where any 404 or 408 response immediately shows an invalid value, so the user cannot act on it).

Perhaps someone with a better developer brain than me can figure out a workaround in this case?

I was able to get HttpClient.h working to send POST requests to my database directly from the Photon, but it appears to block until it receives the HTTP response, which may be too slow for my needs.

Hey,

What if you do NOT show the user the timestamp?

Also, what if you add a refresh button for those anxious users?

Those are the two things I would try in this case.

Gustavo.

Thanks @gusgonnet. I'm not currently showing the user any timestamp, just the current weight (or "invalid" if there are issues with the server). But you're right: I could have the user tap a button to make a single request instead of continuously polling and automatically displaying the current reading. That would change the user experience, though. I'm hoping not to have to do that, but I'll keep this idea in my back pocket. I appreciate it!