I ran these tests on a Xenon running Device OS rc.27.

I know, I know, @rickkas7 says that threading is evil and that we should be using FSMs instead. :-) But I like threading, so here is my question.

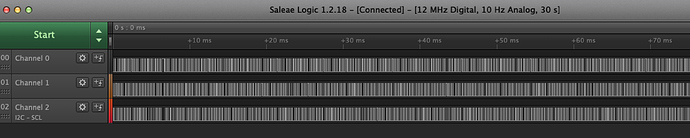

I have 3 threads running at the same priority, each constantly toggling a different GPIO. The purpose is to show, using a logic analyzer, that the scheduler round-robins between the threads every 1 ms.

My initial code toggles the GPIO inside a SINGLE_THREADED_BLOCK, so that a thread's GPIO is always low when it loses the CPU to another thread.
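To make the expectation concrete, here is a small host-side model of what I expect to see on the analyzer. It is plain C++, not Device OS code, and the names `roundRobinSchedule` and `pulsesPerSlice` are made up for this illustration. The per-pulse overhead (the `digitalWrite` calls and loop bookkeeping) is a parameter because I have not measured it.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical model: which thread index owns the CPU in each time slice,
// assuming ideal round robin between equal-priority threads.
std::vector<std::size_t> roundRobinSchedule(std::size_t threadCount,
                                            std::size_t sliceCount) {
    assert(threadCount > 0);
    std::vector<std::size_t> owner;
    owner.reserve(sliceCount);
    for (std::size_t slice = 0; slice < sliceCount; ++slice) {
        owner.push_back(slice % threadCount);  // 0, 1, 2, 0, 1, 2, ...
    }
    return owner;
}

// Hypothetical model: how many complete pulses fit into one time slice.
// overheadUs is an assumed, unmeasured cost per loop iteration.
unsigned pulsesPerSlice(unsigned sliceUs, unsigned pulseUs, unsigned overheadUs) {
    assert(pulseUs + overheadUs > 0);
    return sliceUs / (pulseUs + overheadUs);
}
```

With 3 threads, the model gives the owner sequence 0, 1, 2, 0, 1, 2 for six slices. With a 1000 µs slice and a 20 µs pulse, at most 50 pulses fit in a slice if the overhead were zero.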

Here is the code:

    SYSTEM_MODE(MANUAL);
    SYSTEM_THREAD(ENABLED);

    // Pin numbers handed to each thread through its argument pointer.
    uint8_t d4 = D4;
    uint8_t d5 = D5;
    uint8_t d6 = D6;

    os_mutex_t myMutex;
    os_thread_t thread1;
    os_thread_t thread2;
    os_thread_t thread3;

    void myThread(void *arg) {
        uint8_t* value = (uint8_t*)arg;
        Serial.println("printing from thread, woo hoo");
        Serial.println(*value);
        while (1) {
            // Pulse the pin with thread switching disabled, so the pin is
            // always low when this thread is switched out.
            SINGLE_THREADED_BLOCK() {
                // os_mutex_lock(myMutex);
                digitalWrite(*value, HIGH);
                delayMicroseconds(20);
                digitalWrite(*value, LOW);
                // os_mutex_unlock(myMutex);
            }
        }
    }

    void setup() {
        Serial.begin(9600);
        waitFor(Serial.isConnected, 30000);
        pinMode(D4, OUTPUT);
        pinMode(D5, OUTPUT);
        pinMode(D6, OUTPUT);
        os_mutex_create(&myMutex);
        Serial.println("hello world!!!");
        // Three threads at the same priority, one per pin.
        os_thread_create(&thread1, "testThread1", OS_THREAD_PRIORITY_DEFAULT, myThread, &d4, 4096);
        os_thread_create(&thread2, "testThread2", OS_THREAD_PRIORITY_DEFAULT, myThread, &d5, 4096);
        os_thread_create(&thread3, "testThread3", OS_THREAD_PRIORITY_DEFAULT, myThread, &d6, 4096);
    }

    void loop() {
        delay(100000);
    }

On the logic analyzer, the behavior looks perfect.

Now if I change the code to use a mutex instead of the SINGLE_THREADED_BLOCK, the behavior is no longer round robin and becomes inconsistent.

    SYSTEM_MODE(MANUAL);
    SYSTEM_THREAD(ENABLED);

    // Pin numbers handed to each thread through its argument pointer.
    uint8_t d4 = D4;
    uint8_t d5 = D5;
    uint8_t d6 = D6;

    os_mutex_t myMutex;
    os_thread_t thread1;
    os_thread_t thread2;
    os_thread_t thread3;

    void myThread(void *arg) {
        uint8_t* value = (uint8_t*)arg;
        Serial.println("printing from thread, woo hoo");
        Serial.println(*value);
        while (1) {
            // SINGLE_THREADED_BLOCK() {
            // Pulse the pin while holding the shared mutex; the thread can
            // now be switched out in the middle of a pulse.
            os_mutex_lock(myMutex);
            digitalWrite(*value, HIGH);
            delayMicroseconds(20);
            digitalWrite(*value, LOW);
            os_mutex_unlock(myMutex);
            // }
        }
    }

    void setup() {
        Serial.begin(9600);
        waitFor(Serial.isConnected, 30000);
        pinMode(D4, OUTPUT);
        pinMode(D5, OUTPUT);
        pinMode(D6, OUTPUT);
        os_mutex_create(&myMutex);
        // Three threads at the same priority, one per pin.
        os_thread_create(&thread1, "testThread1", OS_THREAD_PRIORITY_DEFAULT, myThread, &d4, 4096);
        os_thread_create(&thread2, "testThread2", OS_THREAD_PRIORITY_DEFAULT, myThread, &d5, 4096);
        os_thread_create(&thread3, "testThread3", OS_THREAD_PRIORITY_DEFAULT, myThread, &d6, 4096);
    }

    void loop() {
        delay(100000);
    }

Here is the logic analyzer trace.

Am I misunderstanding how the mutex works, or does this look like a bug?
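For comparison, here is the same lock/unlock loop as a minimal host-side sketch in standard C++. It uses `std::mutex` and `std::thread` rather than the Device OS `os_mutex_*` calls, and `countAcquisitions` is a name I made up for this example. Standard C++ gives no fairness guarantee for `std::mutex`, so the per-thread counts it returns can be very uneven. Whether the Device OS mutex behaves the same way is an assumption on my part, and it is exactly what I am asking about.

```cpp
#include <array>
#include <cstddef>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical helper: three threads repeatedly lock and unlock one mutex
// until totalAcquisitions locks have been taken in total. Returns how many
// of those acquisitions each thread got.
std::array<std::size_t, 3> countAcquisitions(std::size_t totalAcquisitions) {
    std::mutex m;
    std::size_t total = 0;                    // guarded by m
    std::array<std::size_t, 3> perThread{};   // guarded by m, zero-initialized

    auto worker = [&](std::size_t id) {
        for (;;) {
            // Lock, do a tiny critical section, unlock at end of scope,
            // then loop straight back and try to lock again.
            std::lock_guard<std::mutex> lock(m);
            if (total >= totalAcquisitions) {
                return;
            }
            ++total;
            ++perThread[id];
        }
    };

    std::vector<std::thread> threads;
    for (std::size_t id = 0; id < perThread.size(); ++id) {
        threads.emplace_back(worker, id);
    }
    for (auto& t : threads) {
        t.join();
    }
    return perThread;
}
```

The three counts always add up to `totalAcquisitions`, but nothing in the standard says how they are split between the threads, and the split can change from run to run.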