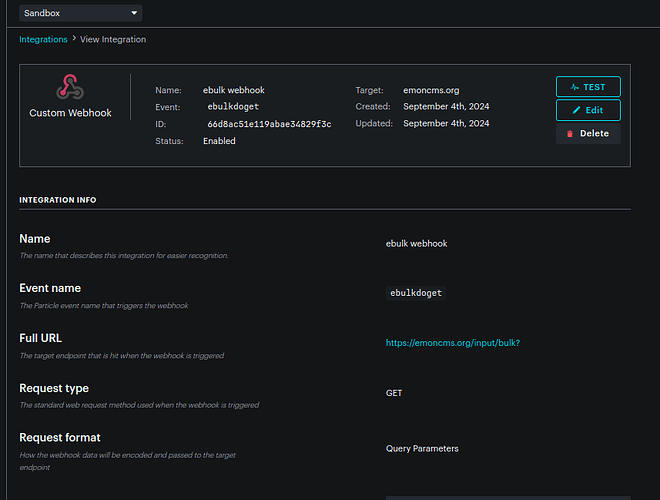

I'm using a webhook to store data on emoncms.org, but logging multiple feeds from multiple devices at a 10-second rate quickly exceeds my allowance of data operations. I want to upload my data in bulk instead.

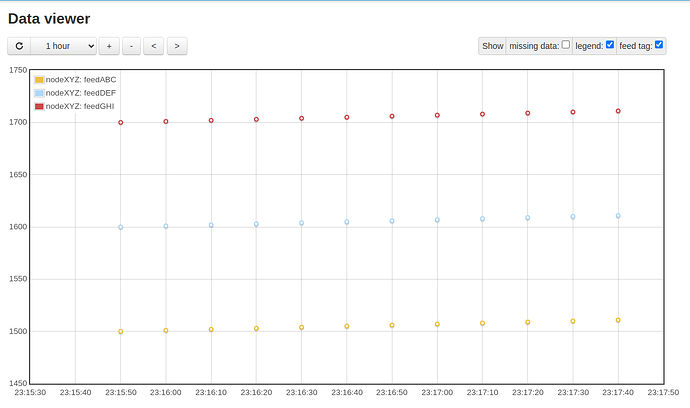

This URL stores 2 minutes of data from 3 different feeds at a 10-second sample rate:

```
https://emoncms.org/input/bulk?data=[
[10,"nodeXYZ",{"feedABC":1500},{"feedDEF":1600},{"feedGHI":1700}],
[20,"nodeXYZ",{"feedABC":1501},{"feedDEF":1601},{"feedGHI":1701}],
[30,"nodeXYZ",{"feedABC":1502},{"feedDEF":1602},{"feedGHI":1702}],
[40,"nodeXYZ",{"feedABC":1503},{"feedDEF":1603},{"feedGHI":1703}],
[50,"nodeXYZ",{"feedABC":1504},{"feedDEF":1604},{"feedGHI":1704}],
[60,"nodeXYZ",{"feedABC":1505},{"feedDEF":1605},{"feedGHI":1705}],
[70,"nodeXYZ",{"feedABC":1506},{"feedDEF":1606},{"feedGHI":1706}],
[80,"nodeXYZ",{"feedABC":1507},{"feedDEF":1607},{"feedGHI":1707}],
[90,"nodeXYZ",{"feedABC":1508},{"feedDEF":1608},{"feedGHI":1708}],
[100,"nodeXYZ",{"feedABC":1509},{"feedDEF":1609},{"feedGHI":1709}],
[110,"nodeXYZ",{"feedABC":1510},{"feedDEF":1610},{"feedGHI":1710}],
[120,"nodeXYZ",{"feedABC":1511},{"feedDEF":1611},{"feedGHI":1711}]
]
```
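Each row of the `data` parameter above has the shape `[time, node, {feed: value}, ...]`. Purely as an illustration, here is a minimal Python sketch of assembling such a URL: the rows are serialised without whitespace and percent-encoded for the query string. `build_bulk_url` is a hypothetical helper, and the emoncms API key/authentication is deliberately left out.

```python
import json
from urllib.parse import quote


def build_bulk_url(rows, base="https://emoncms.org/input/bulk"):
    """Serialise [time, node, {feed: value}, ...] rows into a bulk URL (hypothetical helper)."""
    # Compact separators drop the spaces json.dumps inserts by default.
    data = json.dumps(rows, separators=(",", ":"))
    # Percent-encode brackets, braces, quotes, colons and commas for the query string.
    return base + "?data=" + quote(data, safe="")


# The first two rows of the URL above.
rows = [
    [10, "nodeXYZ", {"feedABC": 1500}, {"feedDEF": 1600}, {"feedGHI": 1700}],
    [20, "nodeXYZ", {"feedABC": 1501}, {"feedDEF": 1601}, {"feedGHI": 1701}],
]
print(build_bulk_url(rows))
```

Note how every row repeats the node name and every value repeats its feed name, which is where the length goes once the labels get long.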

I am trying to adapt this to a situation where the node and feed labels are quite long (about 30 characters each), so I will run out of space in my publish string.

Can I send something like this as my event data string:

```json
{
  "t":10,
  "n":"veryverylongnamednodeXYZ",
  "f":["veryverylongfeedABC","veryverylongfeedDEF","veryverylongfeedGHI"],
  "d":[
    [1500,1501,1502,1503,1504,1505,1506,1507,1508,1509,1510,1511],
    [1600,1601,1602,1603,1604,1605,1606,1607,1608,1609,1610,1611],
    [1700,1701,1702,1703,1704,1705,1706,1707,1708,1709,1710,1711]
  ]
}
```

And then unpack the elements using Mustache notation to recreate a URL like the one above?

The sampling interval "t" is fixed.

I could fix the number of feeds and data elements, but ideally both would be variable.
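Standard Mustache is a logic-less template language: it can loop over arrays but has no arithmetic. That makes computing the offsets 10, 20, ..., 120 and regrouping the per-feed `d` arrays into per-timestamp rows awkward at best in a template alone. Whatever ends up doing the unpacking, the transformation itself looks like the minimal Python sketch below. `pack_event` and `expand` are hypothetical helper names, `max_len` is a placeholder for whatever publish-size limit applies, and the offsets start at `t` to match the URL above.

```python
import json


def pack_event(interval, node, feeds, samples, max_len):
    """Device side (hypothetical): serialise buffered samples as {"t","n","f","d"}.

    samples[k] holds the readings for feeds[k], oldest first. Raises
    ValueError if the result exceeds max_len characters.
    """
    if len(samples) != len(feeds):
        raise ValueError("need one sample list per feed")
    payload = json.dumps(
        {"t": interval, "n": node, "f": feeds, "d": samples},
        separators=(",", ":"),  # no spaces after "," and ":"
    )
    if len(payload) > max_len:
        raise ValueError(f"payload is {len(payload)} chars, limit is {max_len}")
    return payload


def expand(event):
    """Receiving side (hypothetical): rebuild the rows for input/bulk.

    Row i is [t * (i + 1), node, {f[0]: d[0][i]}, {f[1]: d[1][i]}, ...].
    """
    msg = json.loads(event)
    t, node, feeds, data = msg["t"], msg["n"], msg["f"], msg["d"]
    if len(feeds) != len(data):
        raise ValueError("expected one data array per feed")
    if len({len(column) for column in data}) > 1:
        raise ValueError("all feeds must have the same number of samples")
    rows = []
    # zip(*data) transposes per-feed columns into per-timestamp tuples.
    for i, values in enumerate(zip(*data)):
        rows.append([t * (i + 1), node] + [{f: v} for f, v in zip(feeds, values)])
    return rows


feeds = ["veryverylongfeedABC", "veryverylongfeedDEF", "veryverylongfeedGHI"]
samples = [[base + i for i in range(12)] for base in (1500, 1600, 1700)]
# max_len=1000 is a placeholder, not an actual publish limit.
event = pack_event(10, "veryverylongnamednodeXYZ", feeds, samples, max_len=1000)
rows = expand(event)
bulk = json.dumps(rows, separators=(",", ":"))
print(len(event), len(bulk))  # compact event vs. expanded bulk data
print(rows[0])
```

Because `expand` only relies on there being one data array per feed and on all arrays having equal length, both the number of feeds and the number of samples per feed can vary from event to event.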