@zach @jeiden @Dave

Thanks for the update and confirming this is being actively worked on.

For people who don't need to work with more than 100 devices and want a simple way to visualize their data both live and over time, the Azure IoT Suite looks like the best fit. It's free up to a certain point.

For people who want to build a product around the Electron or Photon and sell more than 100 units, Azure Event Hubs is a robust solution that can handle up to 1 million incoming events per second.

Here is how I plan to use Azure together with Particle's Photon and Electron to build a killer new product:

- My product will transmit system status info over Wi-Fi or cellular every 1-10 minutes.
- Azure Event Hubs receives each status message and holds it in a queue for an Azure Stream Analytics job.
- I have created an Azure Stream Analytics job that takes the incoming JSON data and pushes it into Azure Table storage. A single table holds all the sensor data received, and each entry includes the product's unique serial number, so it's easy to search.
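
To make the data flow concrete, here is a minimal sketch of the shape a status message might take and how it could map onto a table row. In my setup the Stream Analytics job does this mapping; the Python below only illustrates the idea. The field names (`serial`, `ts`, `temp_c`, `battery_v`) and the choice of keys are assumptions for illustration. Azure Table entities are identified by a `PartitionKey` plus a `RowKey` (both strings), so using the serial number as the partition key keeps each product's rows together and makes per-device searches a simple key filter.

```python
import json
from datetime import datetime, timezone

# Hypothetical status message as a device might publish it every 1-10 minutes.
# Field names are illustrative assumptions, not a Particle or Azure format.
RAW_MESSAGE = '{"serial": "PH-000123", "ts": 1700000000, "temp_c": 21.5, "battery_v": 3.92}'


def to_table_entity(raw: str) -> dict:
    """Map one JSON status message to a dict shaped like an Azure Table entity."""
    msg = json.loads(raw)
    ts = int(msg["ts"])
    entity = {
        # Serial number as PartitionKey: all rows for one product share a partition.
        "PartitionKey": msg["serial"],
        # Zero-padded epoch seconds, so lexical RowKey order matches time order.
        "RowKey": f"{ts:010d}",
        "ReadingTime": datetime.fromtimestamp(ts, tz=timezone.utc).isoformat(),
    }
    # Copy the remaining sensor fields through unchanged.
    for key, value in msg.items():
        if key not in ("serial", "ts"):
            entity[key] = value
    return entity


if __name__ == "__main__":
    print(to_table_entity(RAW_MESSAGE))
```

Zero-padding the timestamp matters because table keys sort as strings; without it, "900" would sort after "1700000000".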

Personally, I have no need for the Azure IoT Suite's web interface, because I prefer to use Microsoft Power BI to visualize all the data I've pushed into Azure Table storage.

Power BI Desktop is a free application that lets you build just about any custom visualization you can dream of, using an Office-like interface that Windows users will find familiar. Power BI also makes it easy to pull data from Azure data sources, which makes it much simpler to build visualizations that make sense of the data you've collected.

Microsoft Power BI has teamed up with PubNub so that PubNub customers' incoming data streams can be viewed on a custom Power BI dashboard in real time as they arrive. In my opinion, this real-time view on Power BI dashboards is a key feature we need to get working with Particle devices.

Here is the article explaining how to set up a PubNub stream so that it shows up live on a Microsoft Power BI dashboard.

The Power BI team is working on a way to view incoming live data just as you can with PubNub: another Stream Analytics output that pushes incoming data to a live, auto-updating dashboard tile that looks the same as the PubNub example above. Today, the fastest automatic refresh for data on Power BI dashboards is every 15 minutes; otherwise you have to refresh the web page manually.

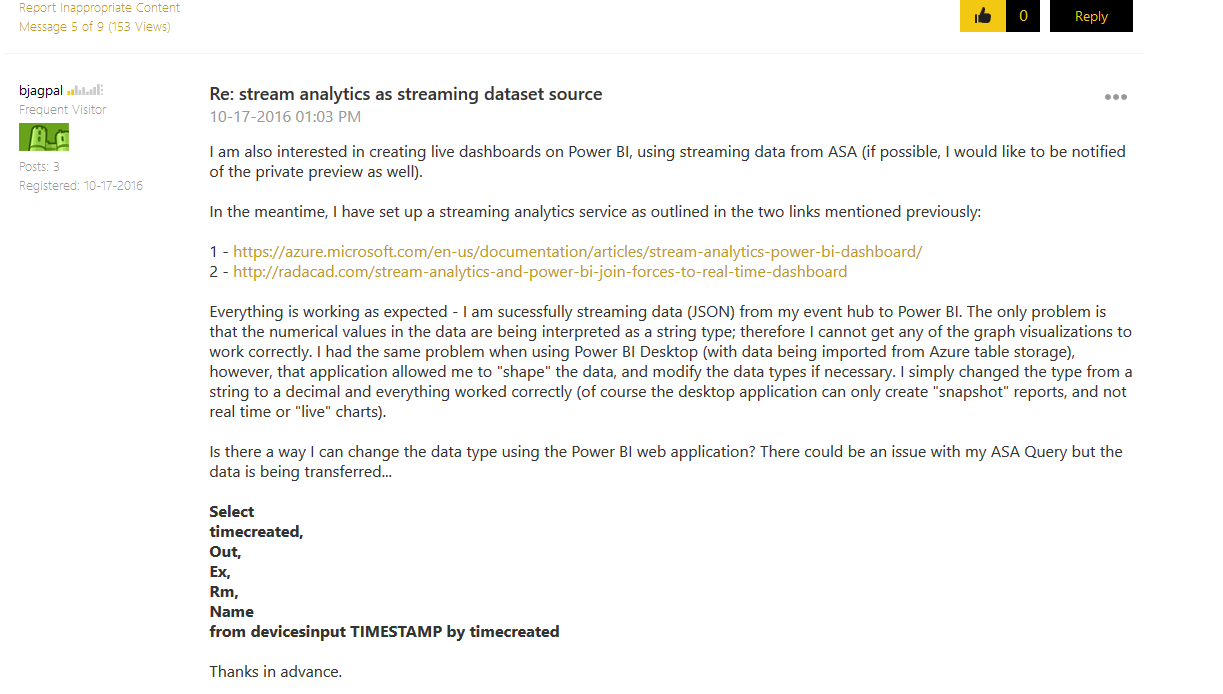

In a recent forum post, @bjagpal was also looking for a way to show live data in a Power BI dashboard tile, and he asked on the Microsoft Power BI forum how to accomplish this.

See his post via this link: https://community.powerbi.com/t5/Integrations-with-Files-and/stream-analytics-as-streaming-dataset-source/m-p/68634/highlight/true#M5372

Here is the thread on this forum where @bjagpal discussed the same real-time dashboard tile issue: Microsoft Power BI + Particle = Awesome Custom Dashboards!

Once you create a custom dashboard, you can share it with the world in a number of ways that Power BI provides. The Power BI team is large and very active, and the platform improves constantly, which reassures me as someone who wants to rely on it as the backend for my business and for the clients who will use our products.

I have run some tests to see what it costs to send data through Azure Event Hubs > Azure Stream Analytics > Azure Table storage, and it seems that the more data you send per hour, the less each message costs. I suspect this has to do with how they bill cloud compute time per hour. I would love to better understand how compute time is billed and how to minimize the service cost.
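
One simple model that would explain falling per-message costs: if a service charges a fixed amount per hour (for example, per provisioned unit) plus a small per-message fee, the fixed part is spread across every message sent in that hour. The sketch below works through that arithmetic. The prices are placeholders, not Azure's published rates, and the device counts and intervals are just examples drawn from the 1-10 minute reporting range above.

```python
def messages_per_hour(devices: int, interval_minutes: float) -> float:
    """Messages per hour for a fleet where each device reports every interval_minutes."""
    return devices * 60.0 / interval_minutes


def cost_per_message(fixed_per_hour: float, per_million: float, msgs_per_hour: float) -> float:
    """Per-message cost under an assumed 'fixed hourly + per-million messages' pricing model."""
    if msgs_per_hour <= 0:
        raise ValueError("msgs_per_hour must be positive")
    # Fixed hourly charge amortized over the hour's messages, plus the variable fee.
    return fixed_per_hour / msgs_per_hour + per_million / 1_000_000


if __name__ == "__main__":
    # PLACEHOLDER prices for illustration only; substitute the current Azure rates.
    FIXED_PER_HOUR = 0.11
    PER_MILLION = 0.03
    for devices, interval in [(100, 10), (1000, 1)]:
        rate = messages_per_hour(devices, interval)
        print(devices, "devices every", interval, "min:",
              rate, "msgs/hour,", cost_per_message(FIXED_PER_HOUR, PER_MILLION, rate), "per message")
```

Under this model the per-message cost approaches the variable fee as volume grows, which matches the trend I saw in testing; the fixed hourly charge is what dominates for small fleets.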

Here are the key things I think you guys can do for those of us who just want a reliable, scalable way to store data transmitted by Photons and Electrons in a database.

One: Make it as easy as possible to add and authenticate new Photons and Electrons on Azure Event Hubs. From what I have seen, Azure IoT Hub provides the easiest way to add, delete, authorize, and deauthorize the Particle devices that send data to Azure. Doing the same with plain Azure Event Hubs is not as easy.
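
Part of why plain Event Hubs is harder is that every sender needs a credential you manage yourself, typically a Shared Access Signature (SAS) token. The sketch below follows the token format Microsoft documents for Event Hubs: an HMAC-SHA256 over the URL-encoded resource URI and an expiry timestamp, signed with a shared access key. The namespace, hub name, per-device publisher path, policy name, and key are all hypothetical placeholders. Generating short-lived tokens on a server, rather than storing the key on each device, is one way to make adding and revoking devices manageable.

```python
import base64
import hashlib
import hmac
import urllib.parse


def make_sas_token(resource_uri: str, key_name: str, key: str, expiry_epoch: int) -> str:
    """Build an Event Hubs-style SAS token for resource_uri, valid until expiry_epoch."""
    # String to sign: URL-encoded resource URI, a newline, then the expiry in epoch seconds.
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    string_to_sign = f"{encoded_uri}\n{expiry_epoch}".encode("utf-8")
    digest = hmac.new(key.encode("utf-8"), string_to_sign, hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("utf-8")
    # urlencode escapes the URI and any '+', '/', '=' characters in the signature.
    return "SharedAccessSignature " + urllib.parse.urlencode(
        {"sr": resource_uri, "sig": signature, "se": str(expiry_epoch), "skn": key_name}
    )


if __name__ == "__main__":
    # HYPOTHETICAL values: namespace, hub, publisher name, policy name, and key.
    uri = "https://example-namespace.servicebus.windows.net/example-hub/publishers/PH-000123"
    print(make_sas_token(uri, "device-send-policy", "not-a-real-key", 1700000000))
```

Revoking a single device is where this gets awkward compared with IoT Hub's device registry, which is exactly the kind of management I'd like to see simplified.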

Two: Work with Microsoft Power BI so that data sent to Azure Event Hubs can also show up in a real-time Power BI dashboard tile, the same way PubNub data can. This would let us build custom dashboards that show real-time data from Particle devices. Given the size of the Power BI team, I'd guess they could get this working in about a week, and it's already in the works anyway.

Three: Based on my testing, the costs of the Azure services break down like this, from highest to lowest:

- Highest cost: Azure Stream Analytics, which pushes incoming data into Azure Table storage.
- Second highest: Azure Event Hubs, which receives all incoming data without missing anything.
- Much lower: Azure Table storage, which holds incoming data for however long you choose. I keep data for 90 days and delete anything older than that.
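
If the table store has no built-in expiry for your setup, a 90-day policy comes down to a periodic cleanup job that computes a cutoff and deletes everything older. The sketch below shows only the selection step and assumes each stored row carries a timezone-aware `reading_time` field (a hypothetical name); the actual delete calls depend on the storage SDK you use and are left out.

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=90)


def select_expired(rows, now):
    """Return the rows strictly older than the retention window, relative to now."""
    cutoff = now - RETENTION
    # A row exactly at the cutoff is kept; only strictly older rows are selected.
    return [row for row in rows if row["reading_time"] < cutoff]


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    rows = [
        {"id": "old", "reading_time": now - timedelta(days=120)},
        {"id": "recent", "reading_time": now - timedelta(days=3)},
    ]
    print([row["id"] for row in select_expired(rows, now)])
```

Running a job like this on a schedule (daily, for example) keeps storage cost roughly flat once the first 90 days of data have accumulated.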

It would be really nice if there were a cheaper way to take the data received by Azure Event Hubs and push it into an Azure database that is also easy to access from Microsoft Power BI, so we can create dashboards to visualize the collected data.

Azure Stream Analytics seems overly expensive for simply pushing incoming data into a database, but maybe that's the price of a service that can scale to handle up to 1 million incoming events per second. If you can eliminate or greatly reduce what Stream Analytics charges for pushing data into a database, that would be a really good thing for us small business owners and for our clients, who will have to cover these operating costs.

Sorry for the long-winded response, but you asked for feedback, and I have some to give after playing around with this combination for a few weeks.

For a small business that wants an easy way to create a custom dashboard layout that fits its product design and aesthetics, I have not found a better backend and data-visualization combination than Azure and Power BI.

I'm looking forward to seeing how this all progresses.

Let me know if I can provide any more info.